Decoding The Digital Jumble: Understanding & Fixing Character Encoding Errors

Have you ever opened a webpage, an email, or a document only to be greeted by a chaotic string of characters like ठचडी हब4यू, ã«, ã, ã¬, ã¹, or ã instead of the expected text? This phenomenon, often dubbed "mojibake," is a common digital headache that can render perfectly good information utterly unreadable. It's a frustrating experience that can impact everything from your daily browsing to critical data exports, leaving you wondering why your digital world suddenly decided to speak in an alien tongue.

This article will demystify these perplexing character displays, diving deep into the world of character encoding. We'll explore why these strange combinations appear, using real-world examples from web pages, databases, and emails. More importantly, we'll equip you with the knowledge and practical steps to diagnose, fix, and prevent these encoding glitches, ensuring your digital text always appears exactly as intended.


Table of Contents

- The Root of the Problem: What is Character Encoding?

- Unmasking Mojibake: Why ठचडी हब4यू Appears

- The Universal Language: Embracing Unicode and UTF-8

- Practical Solutions: How to Fix ठचडी हब4यू and Other Encoding Glitches

- Preventing Future Mojibake: Best Practices

- Beyond the Bytes: The Importance of Correct Character Display

- Conclusion: Mastering the Digital Alphabet

The Root of the Problem: What is Character Encoding?

At its core, a computer doesn't understand letters, symbols, or emojis in the way humans do. It only understands numbers – specifically, binary numbers (0s and 1s). To display text, computers rely on a system called "character encoding," which is essentially a map that assigns a unique number to every character. When you type the letter 'A', the computer stores a specific number, and when it displays 'A', it looks up that number in its map to know which visual representation to show.

The earliest widely adopted encoding standard is ASCII (American Standard Code for Information Interchange). ASCII is a 7-bit code: it defines 128 values (0-127) covering English letters, digits, basic punctuation, and control characters. Each value is normally stored in a single 8-bit byte with the high bit left unused. As one commonly quoted description puts it, "When a byte (as you read the file in sequence 1 byte at a time from start to finish) has a value of less than decimal 128 then it is an ascii character." This simplicity worked well for English, but as computing became global, the need for characters from other languages (Cyrillic, Arabic, Chinese, Japanese, and many more) quickly outgrew ASCII's limited capacity.

This led to the development of many legacy encodings: single-byte extensions of ASCII such as ISO-8859-1 for Western European languages, and multi-byte schemes such as Shift_JIS for Japanese, each attempting to fit more characters into the available byte space. However, because these encodings assign different characters to the same byte values, a document created in one encoding might appear as gibberish when read as another.

The solution to this chaos was Unicode. Unicode is a universal character set that aims to assign a unique number (a "code point") to every character in every language, including symbols, emojis, and historical scripts. To store these code points as bytes, several "Unicode Transformation Formats" (UTFs) were created, with UTF-8 being the most widely adopted. UTF-8 is a variable-width encoding: ASCII characters use just one byte, while other characters use two, three, or four bytes. This efficiency and backward compatibility with ASCII made it the de facto standard for the internet.
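To make the variable-width idea concrete, here is a minimal Python sketch that prints how many bytes UTF-8 uses for a few sample characters. The sample characters and the helper name `utf8_width` are chosen purely for illustration.

```python
# Sample characters from different parts of Unicode (illustrative choices).
samples = ["A", "\u00e9", "\u306b", "\U0001F600"]  # A, é, に, 😀


def utf8_width(ch: str) -> int:
    """Return the number of bytes UTF-8 needs to store one character."""
    return len(ch.encode("utf-8"))


for ch in samples:
    encoded = ch.encode("utf-8")
    # Show the code point, the byte count, and the raw bytes in hex.
    print(f"U+{ord(ch):04X} {ch!r}: {utf8_width(ch)} byte(s) -> {encoded.hex()}")
```

The ASCII letter takes one byte, the accented Latin letter two, the hiragana three, and the emoji four, which matches the one-to-four byte range described above.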

Unmasking Mojibake: Why ठचडी हब4यू Appears

The appearance of garbled text, often resembling patterns like ठचडी हब4यू, ã«, ã, ã¬, ã¹, or ã, is almost always a symptom of an encoding mismatch. This happens when text encoded in one character set (e.g., UTF-8) is interpreted by a system or application expecting a different character set (e.g., Shift_JIS or a non-UTF-8 legacy encoding). The system reads the bytes, tries to match them to its incorrect map, and produces nonsensical characters. It's like trying to read a French book with an English-to-German dictionary – the words simply don't align, and the output is a jumbled mess.
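The mismatch can be reproduced in a few lines of Python. This is only a sketch: it takes one Japanese character, encodes it as UTF-8, and then deliberately decodes the same bytes with the wrong map. The choice of character and of the two "wrong" encodings is an assumption made for illustration.

```python
original = "\u306b"              # に (HIRAGANA LETTER NI)
raw = original.encode("utf-8")   # b'\xe3\x81\xab': three bytes in UTF-8

# Wrong map 1: read the UTF-8 bytes as Latin-1 (one character per byte).
as_latin1 = raw.decode("latin-1")
print(repr(as_latin1))           # 'ã\x81«'

# Wrong map 2: read the same bytes as Shift_JIS.
as_sjis = raw.decode("shift_jis", errors="replace")
print(repr(as_sjis))

# The bytes themselves were never damaged; decoding them correctly still works.
print(raw.decode("utf-8") == original)
```

The Latin-1 result contains an invisible control character between "ã" and "«", which is why such text is often reported as "ã«". As long as only the interpretation is wrong and the bytes are intact, the text is fully recoverable.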

Let's break down some typical scenarios where this digital confusion, manifesting as strange character display, commonly occurs:

Scenario 1: Web Pages and HTTP Headers

A very common place to encounter characters like "ã«", "ã", "ã¬", "ã¹", "ã" is on webpages. A typical report reads: "My page often shows things like ã«, ã, ã¬, ã¹, ã in place of normal characters,I use utf8 for header page and mysql encode." This is a classic problem: the server or the HTML document claims to be UTF-8, but somewhere along the line the browser isn't getting the memo, or the data itself was already corrupted. Browsers rely on the HTTP `Content-Type` header (e.g., `Content-Type: text/html; charset=UTF-8`) or a `<meta charset="UTF-8">` tag within the HTML to understand how to interpret the page's bytes. If these declarations are missing, incorrect, or overridden by a conflicting setting, the browser falls back to its own best guess (often a local legacy encoding), leading to mojibake.

A Japanese-language description of the same symptom, translated here, puts it this way: "In all cases, these are common symptoms that appear when a system that originated in the West, on the assumption that languages use only Western Latin characters, has been internationalized but does not fully support UTF-8. You probably see this most often in connection with Safari. This is a case where a UTF-8 string is interpreted as Shift_JIS." In other words, when software expects one encoding and receives UTF-8 bytes, it misreads them and produces exactly this kind of garbled display.

Scenario 2: Database Encoding Mismatches (MySQL)

Databases are another hotbed for encoding issues. One typical report reads: "I have been given an export from a mysql database that seems to have had it's encoding muddled somewhat over time and contains a mix of html char codes such as &". This suggests that the data was not handled consistently when it was stored or retrieved. MySQL, like many databases, has multiple levels of character set configuration: the server, the database, the table, and even the column. Crucially, the connection between the client (your application) and the database also has its own character set. If the application sends UTF-8 bytes over a connection declared as Latin1, or if data in one encoding is dumped into a table created with another, the stored text is likely to be corrupted. When you then read this data back, any non-ASCII characters show the tell-tale signs of mojibake.
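The connection mismatch described above can be simulated without a database. The sketch below is a simplified model, not MySQL's actual code path: the function name `simulate_latin1_connection` is hypothetical, and Python's `latin-1` codec stands in for MySQL's `latin1` character set, which in practice behaves more like Windows-1252.

```python
def simulate_latin1_connection(text: str) -> bytes:
    """Model UTF-8 text sent over a connection declared as Latin-1
    and stored in a UTF-8 column (a simplified illustration)."""
    sent = text.encode("utf-8")        # bytes the application actually sends
    misread = sent.decode("latin-1")   # server treats each byte as one Latin-1 character
    return misread.encode("utf-8")     # server converts those characters to UTF-8 for storage


stored = simulate_latin1_connection("caf\u00e9")  # "café"
print(stored.decode("utf-8"))  # cafÃ©
print(len(stored))             # 7 bytes instead of the original 5
```

The stored value is now "double-encoded": each non-ASCII character has been expanded twice, which is why such data looks wrong even when every later step correctly uses UTF-8.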

Scenario 3: File Encoding and Text Editors

Even simple text files can fall victim to encoding problems. If a file is saved by a text editor in one encoding (e.g., "ANSI," the Windows name for a legacy code page such as Windows-1252) but then opened by another application or system expecting UTF-8, you'll see mojibake or a decoding error. This is particularly common with configuration files, code snippets, and data exports. Email is affected in the same way. One typical complaint is, "I get this strange combination of characters in my emails replacing '," which points to an email client misinterpreting the encoding of the message body. Email messages declare their content type and character set in MIME headers, and if these are incorrect or ignored, the recipient's client will display garbled text. When debugging file-level problems, it often helps to pick one affected character and inspect exactly which bytes were stored for it.
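One common way to handle files of unknown origin is to try strict UTF-8 first and fall back to a legacy encoding only if that fails. The sketch below illustrates the idea. The function name `decode_guessing` and the default fallback of `cp1252` are assumptions, and the right fallback depends on where your files come from.

```python
from typing import Tuple


def decode_guessing(data: bytes, fallback: str = "cp1252") -> Tuple[str, str]:
    """Decode bytes as UTF-8 if they are valid UTF-8, else use a legacy fallback.

    Returns the decoded text and the name of the encoding that was used.
    """
    try:
        # "utf-8-sig" also strips a leading byte order mark if one is present.
        return data.decode("utf-8-sig"), "utf-8"
    except UnicodeDecodeError:
        # Bytes that are undefined in the fallback encoding become U+FFFD.
        return data.decode(fallback, errors="replace"), fallback


legacy_bytes = "na\u00efve".encode("cp1252")  # "naïve" saved by a legacy editor
print(decode_guessing(legacy_bytes))
print(decode_guessing("na\u00efve".encode("utf-8")))
```

This heuristic works because most legacy-encoded text with accented characters is not valid UTF-8. It is not foolproof: short or unusual byte sequences can be valid in both encodings, so an explicit declaration is always preferable to guessing.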

The Universal Language: Embracing Unicode and UTF-8

Given the complexities and pitfalls of legacy encodings, the clear solution for modern digital communication is the consistent adoption of Unicode, specifically UTF-8. As one online Unicode character table describes its purpose, "Use this unicode table to type characters used in any of the languages of the world." This underscores Unicode's primary goal: to provide a unique identity for every character, regardless of language or platform. It's the foundation upon which global digital communication is built.

Beyond standard alphabets, Unicode's repertoire extends to an astonishing array of symbols. As one Unicode reference table puts it, "In addition, you can type emoji, arrows, musical notes, currency symbols, game pieces, scientific and many other types of symbols." This repertoire is organized into "Unicode blocks," contiguous ranges of code points that group related characters. For instance, "Emoji can be found in the following unicode blocks, Arrows, basic latin, cjk symbols and punctuation, emoticons, enclosed alphanumeric supplement, enclosed alphanumerics, enclosed." This structure allows for the seamless display of everything from a simple 'A' to a complex Chinese character, a musical note, or a smiling emoji, provided all systems involved are correctly configured for UTF-8. UTF-8 can represent this entire spectrum while remaining backward compatible with ASCII, so English text still takes up minimal space. This is why UTF-8 is the recommended encoding for new development and migration projects: it drastically reduces the likelihood of mojibake.
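Python's standard `unicodedata` module can show the code point and official name behind any character, which is a quick way to see that letters, arrows, musical notes, currency symbols, game pieces, and emoji all live in the same numbering scheme. The helper name `describe` and the sample characters are illustrative choices.

```python
import unicodedata


def describe(ch: str) -> str:
    """Return the code point and official Unicode name of a character."""
    return f"U+{ord(ch):04X} {unicodedata.name(ch)}"


# A letter, an arrow, a musical note, a currency symbol, a game piece, an emoji.
for ch in ["A", "\u2192", "\u266a", "\u20ac", "\u265e", "\U0001F600"]:
    print(ch, describe(ch))
```

Looking up a suspicious character this way is also a practical debugging step: if the name is something like a Latin letter with a tilde where you expected a Japanese or Hindi character, the text was almost certainly decoded with the wrong encoding.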

Practical Solutions: How to Fix ठचडी हब4यू and Other Encoding Glitches

Fixing character encoding issues, especially when you're seeing ठचडी ह¤¬4यू or similar garbled output, requires a systematic approach. The key is consistency: ensuring that the encoding is declared and honored at every stage of data handling – from creation to storage to display. Here's how to tackle the most common problems:

Web Page Encoding Fixes

If your web pages are displaying characters like ã«, ã, ã¬, ã¹, or ã, despite your efforts, here are the primary areas to check:

- HTTP Headers: The most authoritative way to tell a browser the page's encoding is via the HTTP `Content-Type` header sent by the web server. Ensure your server (Apache, Nginx, IIS) is configured to send `Content-Type: text/html; charset=UTF-8` for HTML files. JSON is defined as UTF-8, so for JSON responses `Content-Type: application/json` is sufficient, although many servers also append `charset=UTF-8`. This often involves editing server configuration files (e.g., `AddDefaultCharset UTF-8` in `.htaccess` for Apache, or `charset utf-8;` in `nginx.conf` for Nginx).

- HTML Meta Tag: As a fallback, include `<meta charset="UTF-8">` as early as possible within the `<head>` section of your HTML document. This is crucial if the HTTP header is missing or incorrect.

- Script Encoding: If you're generating content dynamically with PHP, Python, Node.js, etc., ensure your scripts are saved as UTF-8 and explicitly set the character set before outputting content (e.g., `header('Content-Type: text/html; charset=utf-8');` in PHP).

- Database Connection: If your web page content comes from a database, ensure the connection to the database is also UTF-8 (more on this below).

- Browser Settings: While less common for modern browsers, sometimes a user's browser might override the page's declared encoding. One Japanese-language troubleshooting tip, translated here, suggests that if a page is garbled: "Right-click on the page, choose Encoding, and try selecting Japanese (Shift_JIS) or Auto-select. UNICODE (UTF-8) is probably selected." Where a browser still offers such a menu, this manual override is useful for testing or in rare cases where the page's declaration is wrong.
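The first three checks in this list can be seen working together in a small Python sketch built on the standard library's `http.server`. It is meant only as an illustration of a consistent declaration, not as production server code. The names `PAGE`, `build_response`, `Utf8Handler`, and `serve`, and the port number, are all hypothetical.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# The HTML declares UTF-8 in a meta tag placed early in the head.
PAGE = """<!DOCTYPE html>
<html lang="en">
<head>
<meta charset="UTF-8">
<title>Encoding demo</title>
</head>
<body><p>caf\u00e9 \u306b \U0001F600</p></body>
</html>
"""


def build_response(html: str):
    """Encode the page as UTF-8 and build headers that say so."""
    body = html.encode("utf-8")
    headers = {
        "Content-Type": "text/html; charset=UTF-8",
        "Content-Length": str(len(body)),  # length in bytes, not characters
    }
    return headers, body


class Utf8Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        headers, body = build_response(PAGE)
        self.send_response(200)
        for name, value in headers.items():
            self.send_header(name, value)
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8000) -> None:
    """Start the demo server; call this manually to try it in a browser."""
    HTTPServer(("127.0.0.1", port), Utf8Handler).serve_forever()
```

The important point is that the header, the meta tag, and the actual bytes all agree. Note that `Content-Length` is computed from the encoded bytes, since non-ASCII characters occupy more than one byte each.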

Database Encoding Repair (MySQL Focus)

When dealing with a MySQL database whose encoding has been "muddled" over time, fixing the issue can be complex but is crucial for data integrity. The queries and settings below address the most common problems. The goal is to ensure UTF-8 (specifically `utf8mb4` for full emoji support) is used end-to-end:

- Database and Table Encoding: Run these queries for all relevant databases and tables. Be cautious, as converting existing data can sometimes lead to double-encoding if not handled correctly. It's often safer to export the data, ensure it's correctly encoded, and then re-import it into a newly created, correctly encoded database/table.

```sql
-- Set the default character set for new tables in this database
ALTER DATABASE your_database_name CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci;
-- Convert an existing table's columns and data
ALTER TABLE your_table_name CONVERT TO CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci;
```

- Connection Encoding: This is critical. Your application must tell MySQL to use UTF-8 for the connection. For PHP, use `mysqli_set_charset($link, "utf8mb4");` or `PDO::MYSQL_ATTR_INIT_COMMAND => "SET NAMES utf8mb4"`. For other languages, similar functions exist (e.g., `charset=utf8mb4` in connection strings).

- MySQL Server Configuration: In your `my.cnf` or `my.ini` file, ensure the server defaults are set:

```ini
[mysqld]
character-set-server=utf8mb4
collation-server=utf8mb4_unicode_ci

[client]
default-character-set=utf8mb4

[mysql]
default-character-set=utf8mb4
```

Restart MySQL after making changes.

- Data Export/Import: When exporting data, always specify UTF-8. For `mysqldump`, use `mysqldump --default-character-set=utf8mb4 ... > dump.sql`. When importing, ensure the target database/table is UTF-8 and the import command also specifies the encoding.
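If exported text has already been double-encoded, it can sometimes be repaired after export by reversing the wrong decoding step. The following Python sketch shows the idea. The function name `repair_double_encoded` is hypothetical, and the default of `cp1252` as the wrongly applied encoding is an assumption that may not match your data.

```python
def repair_double_encoded(text: str, wrong: str = "cp1252") -> str:
    """Undo one round of 'UTF-8 bytes were read as `wrong`'.

    If the text does not look double-encoded, it is returned unchanged.
    """
    try:
        # Turn the mojibake characters back into the original bytes,
        # then decode those bytes as the UTF-8 they always were.
        return text.encode(wrong).decode("utf-8")
    except (UnicodeEncodeError, UnicodeDecodeError):
        # Either the text has characters outside `wrong`, or the bytes are
        # not valid UTF-8: in both cases it was probably never double-encoded.
        return text


print(repair_double_encoded("caf\u00c3\u00a9"))  # café
print(repair_double_encoded("caf\u00e9"))        # café (already correct, unchanged)
```

Treat this as a diagnostic aid rather than a blanket fix: run it on a copy of the data, review the changes, and keep in mind that text which legitimately contains sequences like "Ã©" would be altered.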

File and Application-Level Solutions

Beyond web and database issues, general file handling and application settings can cause garbled text:

- Text Editor Settings: Always save your code files, configuration files, and any text documents as UTF-8. Most modern text editors (like VS Code, Sublime Text, Notepad++, Atom) allow you to specify the encoding when saving or to convert existing files.

- Email Clients: If you're seeing "strange combination of characters in my emails," ensure your email client's outgoing and incoming encoding settings are set to UTF-8. Most clients handle this automatically, but misconfigurations can occur, especially with older clients or specific email providers.

- Operating System Locale: While less common for general web browsing, the operating system's locale settings can influence how applications handle text, particularly in command-line environments. Ensure your system locale is set to a UTF-8 compatible one (e.g., `en_US.UTF-8` on Linux).
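For files that were saved in a legacy encoding, a one-off conversion to UTF-8 is usually straightforward once you know the original encoding. This sketch assumes you do know it. The function name `convert_to_utf8` and any file names you pass to it are hypothetical. The last line prints the encoding Python infers from the operating system locale, which is a quick way to check the locale point above.

```python
import locale
from pathlib import Path


def convert_to_utf8(src: Path, dst: Path, source_encoding: str) -> None:
    """Read `src` using its known legacy encoding and write it to `dst` as UTF-8."""
    text = src.read_text(encoding=source_encoding)  # fails loudly if the encoding is wrong
    dst.write_text(text, encoding="utf-8")


# The encoding Python will use by default for text files on this system.
print(locale.getpreferredencoding(False))
```

Writing to a separate destination file keeps the original intact until you have confirmed that the converted text looks right. Passing `encoding=` explicitly on every read and write also means the result no longer depends on the system locale.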

Preventing Future Mojibake: Best Practices

The best way to deal with the frustrating appearance of ठचडी ह¤¬4यू and other encoding errors is to prevent them from happening in the first place. Consistency and vigilance are your greatest allies:

- Standardize on UTF-8 Everywhere: Make UTF-8 your default and only character encoding across all layers of your application stack: web servers, application code, databases, configuration files, and even development environments. Avoid mixing encodings.

- Explicitly Declare Encoding: Never assume. Always explicitly declare the character encoding in your HTTP headers, HTML meta tags, database connection strings, and file headers. This leaves no room for ambiguity.

- Validate Input and Output: Implement validation checks to ensure that incoming data is indeed UTF-8, and that outgoing data is correctly encoded before being sent to a client or stored in a database. Libraries and frameworks often provide built-in functions for this.

- Use Modern Tools: Leverage modern programming languages, frameworks, and database versions that have robust UTF-8 support built-in. Older systems may require more manual configuration.

- Educate Your Team: Ensure that everyone involved in content creation, development, and system administration understands the importance of character encoding and the best practices for handling UTF-8. A single misstep can propagate encoding issues throughout an entire system.
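The "validate input and output" practice can be sketched as a pair of small boundary functions: one that refuses bytes that are not valid UTF-8, and one that always encodes outgoing text explicitly. The names `require_utf8` and `to_wire` are hypothetical, and a real application would likely rely on its framework's equivalents.

```python
def require_utf8(data: bytes) -> str:
    """Decode incoming bytes as strict UTF-8, or raise ValueError."""
    try:
        return data.decode("utf-8")
    except UnicodeDecodeError as exc:
        # Report where decoding failed to make the bad input easier to find.
        raise ValueError(f"input is not valid UTF-8 at byte {exc.start}") from exc


def to_wire(text: str) -> bytes:
    """Encode outgoing text explicitly as UTF-8 instead of relying on defaults."""
    return text.encode("utf-8")


print(require_utf8(to_wire("caf\u00e9")))
```

Rejecting invalid input at the boundary is a deliberate design choice: it surfaces an encoding problem at the moment it enters the system, rather than letting it be stored and discovered later as mojibake.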

Beyond the Bytes: The Importance of Correct Character Display

While fixing characters like ठचडी ह¤¬4यू might seem like a purely technical challenge, its implications extend far beyond mere bytes and code. The correct display of characters is fundamental to several critical aspects of digital interaction:

- User Experience: Mojibake is a jarring and unprofessional experience. It immediately erodes user trust and makes content unreadable, leading to frustration and abandonment. A seamless, readable experience is paramount for engagement.

- Data Integrity: Corrupted characters are corrupted data. If your database stores "ठप+बठॠठॠठ+तॠ+ठॠल+ठॠलनॠ+सॠ+रॠठ+नहॠठ+थà" instead of meaningful text, that information is lost or severely compromised. This can have serious consequences for business operations, analytics, and legal compliance.

- Global Reach and Accessibility: In an increasingly interconnected world, content needs to be accessible to a global audience. Proper UTF-8 implementation ensures that users from any linguistic background can read and interact with your content without encountering barriers. This also matters for search engines and international SEO: garbled content may not be indexed or understood correctly.

- Brand Reputation: Websites or applications that consistently display character errors appear unprofessional and unreliable. This can significantly damage a brand's reputation and credibility in the digital space.

Understanding and mastering character encoding is not just a technical detail; it's a foundational skill for anyone working with digital content, ensuring that information is conveyed accurately and effectively to its intended audience.

Conclusion: Mastering the Digital Alphabet

The appearance of strange, garbled text like ठचडी ह¤¬4यू, ã«, or ã is a clear signal: your digital systems are speaking different languages. This article has illuminated the underlying causes of these character encoding errors, from mismatched HTTP headers and database configurations to inconsistent file handling. We've seen how the universal standard of Unicode and its most popular encoding, UTF-8, offers the robust solution needed for a truly global and readable internet.

By implementing the practical solutions discussed – ensuring consistent UTF-8 declarations in web servers, HTML, and database connections, and adopting best practices across all your digital assets – you can effectively banish mojibake from your experience. Mastering character encoding isn't just about fixing a technical glitch; it's about preserving data integrity, enhancing user experience, and ensuring your digital communications are clear, accurate, and accessible to everyone, everywhere. Don't let your valuable content get lost in translation. Take control of your character encoding today!

Have you encountered similar encoding nightmares? Share your experiences and solutions in the comments below! If this article helped you untangle your digital jumble, consider sharing it with others who might be struggling with the same issues. For more insights into web development and data management, explore other articles on our site.

![[ Tutoriel ] - Faire le a majuscule accent grave (À) avec le clavier](https://demarcheasuivre.com/wp-content/uploads/2022/09/comment-taper-un-a-majuscule-avec-accent-grave.gif)

[ Tutoriel ] - Faire le a majuscule accent grave (À) avec le clavier

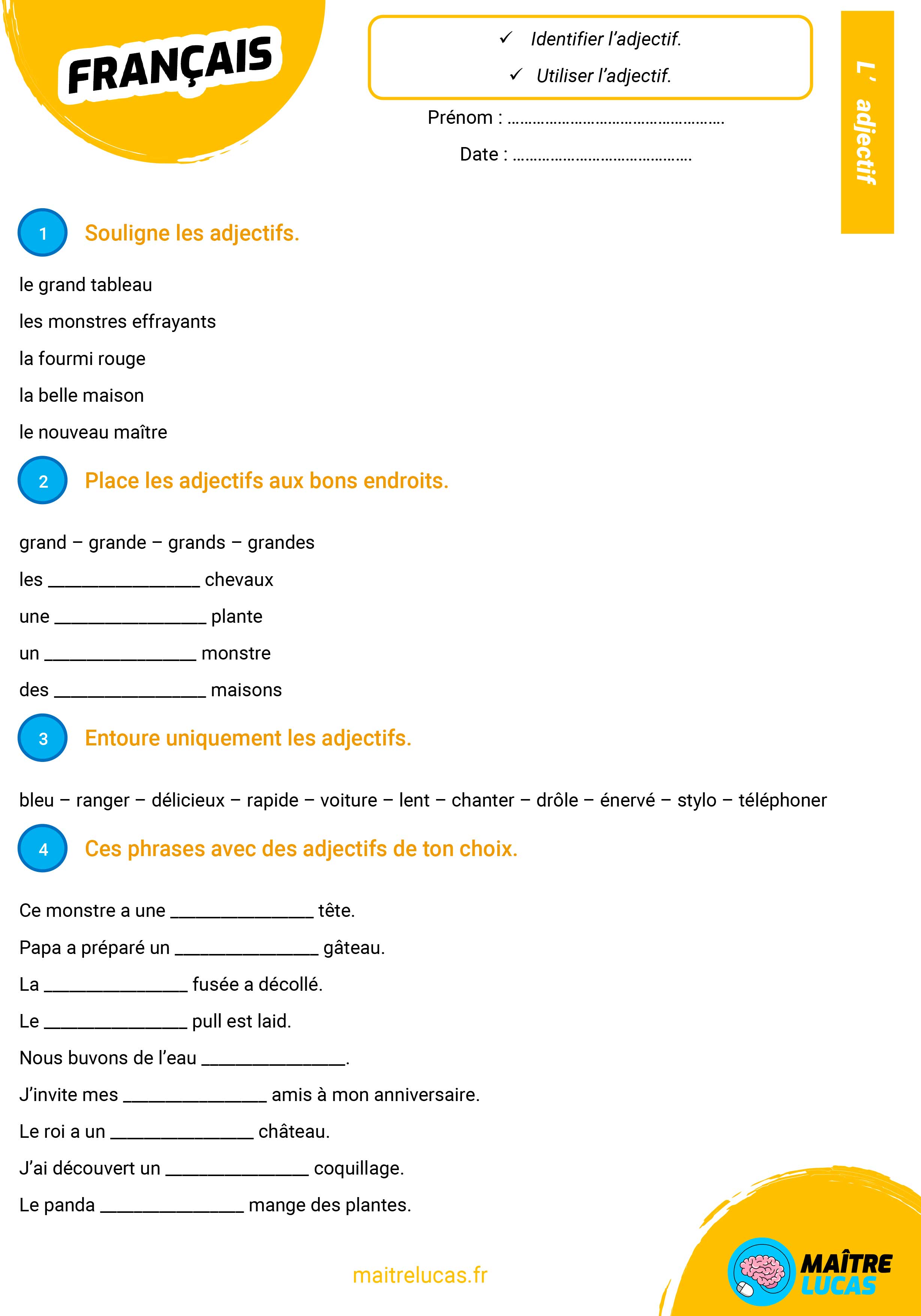

Fiches Exercices les adjectifs CE2 - Maître Lucas

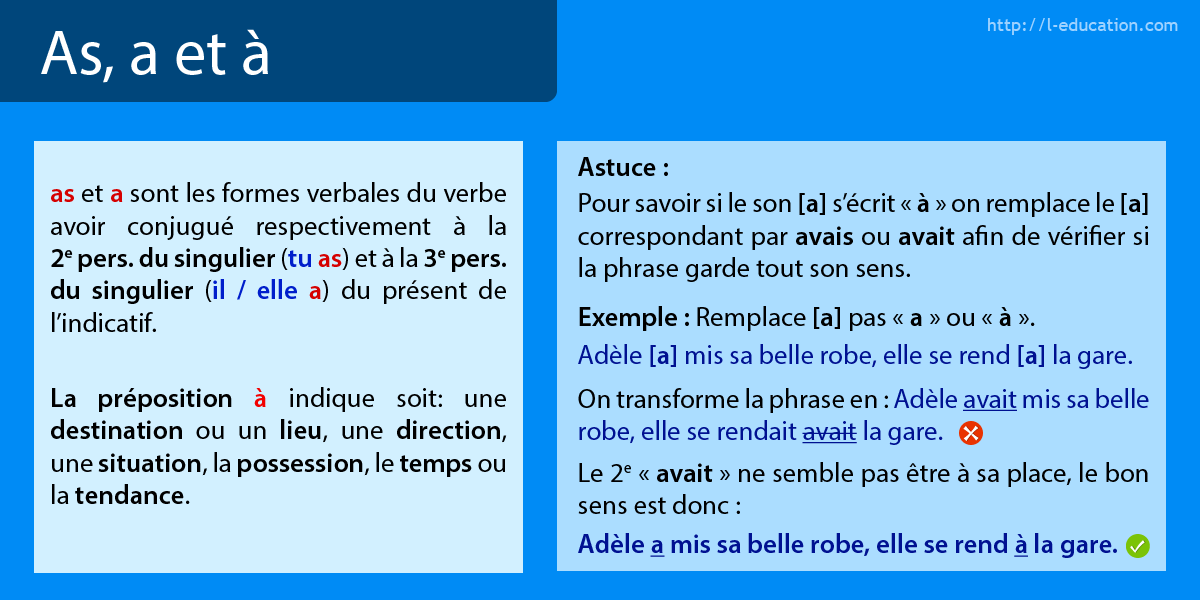

les homophones de cour